There are several artificial intelligence (AI) chatbots on the market today, such as ChatGPT, Google Bard, and Bing AI Chat. However, all of them require an internet connection. What if you want to install a similar large language model (LLM) on your own computer and use it offline, as a private AI chatbot? Stanford’s recent release of the Alpaca model brings this possibility closer: you can now run a ChatGPT-like language model on your personal computer without internet access. Let’s look at how to run an LLM locally without an internet connection.

What Are Alpaca and LLaMA?

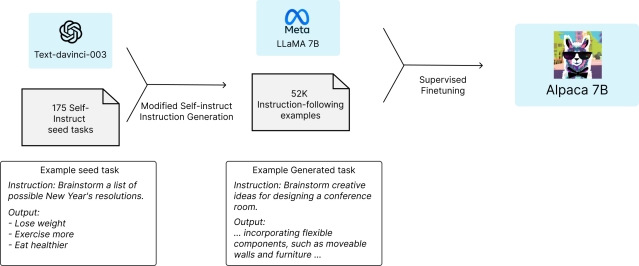

Alpaca and LLaMA are both AI language models. LLaMA is a family of large language models created by Meta. Alpaca, developed by computer scientists at Stanford University, was produced by fine-tuning Meta’s LLaMA 7B model on instruction-following data generated with OpenAI’s text-davinci-003 model, using the self-instruct method. With just 7 billion parameters, Alpaca is known for its small size and low cost. Despite its size, Alpaca performed comparably to text-davinci-003 in Stanford’s preliminary evaluation, and it can be run on a local computer without an internet connection. In testing, Alpaca also performed better than Meta’s base LLaMA model, and it is fast.

What Kind of Hardware Do You Need to Run Alpaca?

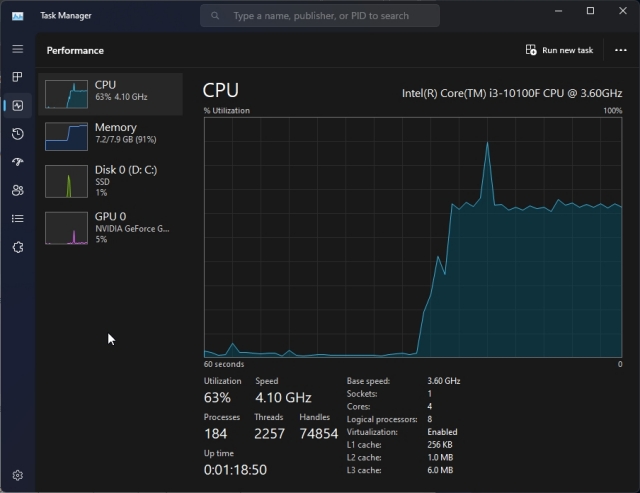

Alpaca 7B runs on a wide range of hardware, including modest machines. For example, one user who installed Alpaca 7B on an entry-level PC reported that it ran well. The PC had a 10th-Gen Intel i3 processor, a 256GB SSD, 8GB of RAM, and an entry-level Nvidia GeForce GT 730 GPU with 2GB of VRAM.

Set Up the Software Environment to Run Alpaca and LLaMA

1. Windows

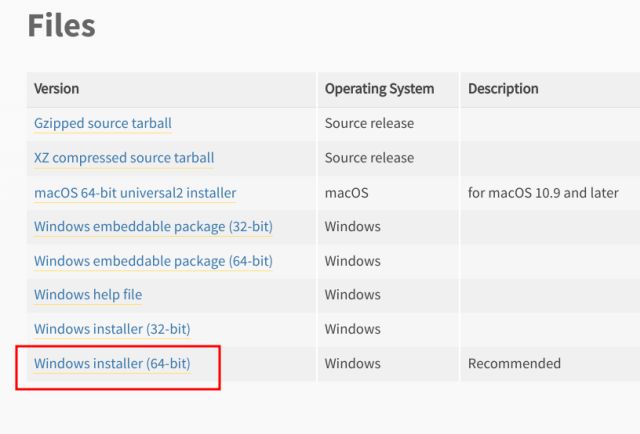

- Download Python 3.10 (or an earlier version) from the official Python website, choosing the “Windows installer (64-bit)” option.

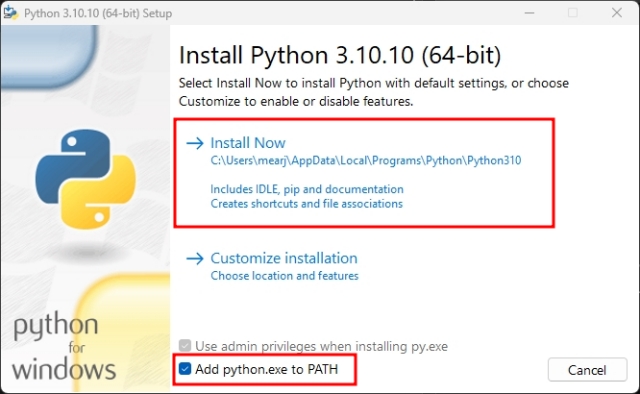

- Launch the setup file and select the checkbox next to “Add Python.exe to PATH.” Proceed to install Python with the default settings.

- Next, download Node.js version 18.0 (or higher) from the official Node.js website and install it with the default settings.

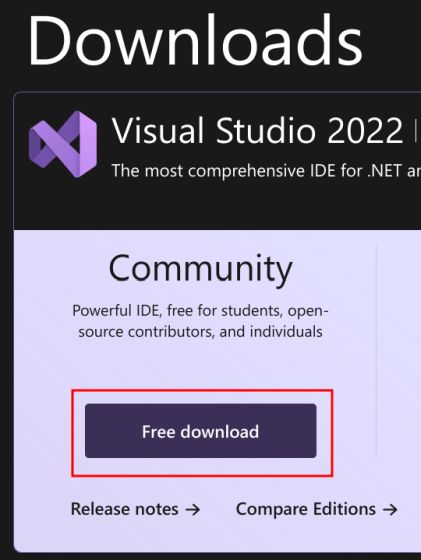

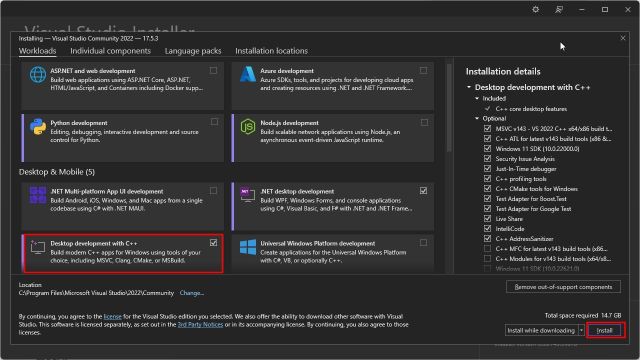

- Download the free Visual Studio “Community” edition from the official Visual Studio website.

- Launch the setup file for Visual Studio 2022, and ensure that “Desktop development with C++” is enabled in the subsequent window.

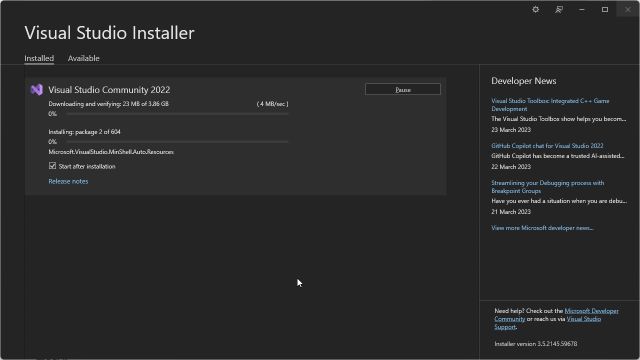

- Click “Install” and wait for the installation process to complete.

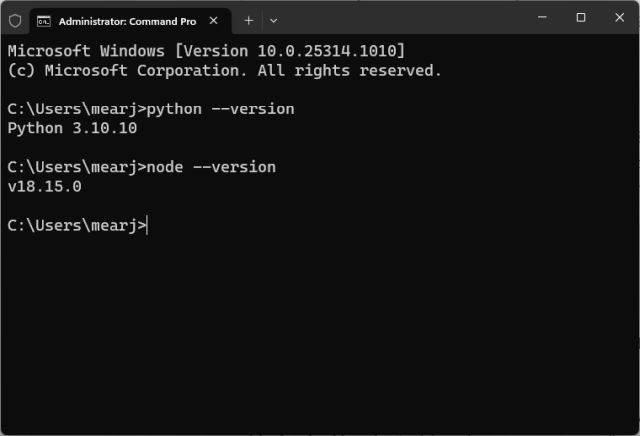

- Restart the computer once everything has been installed. Then open “Command Prompt” and run the following commands to verify that Python and Node.js were installed successfully and to see their version numbers:

python --version

node --version

2. Apple macOS

Typically, macOS already includes a pre-installed version of Python, meaning that users only need to install Node.js (version 18.0 or higher) to begin using a large language model offline. Here are the steps to install Node.js on macOS:

- Download the Node.js macOS Installer (version 18.0 or above) from the official Node.js website and install it.

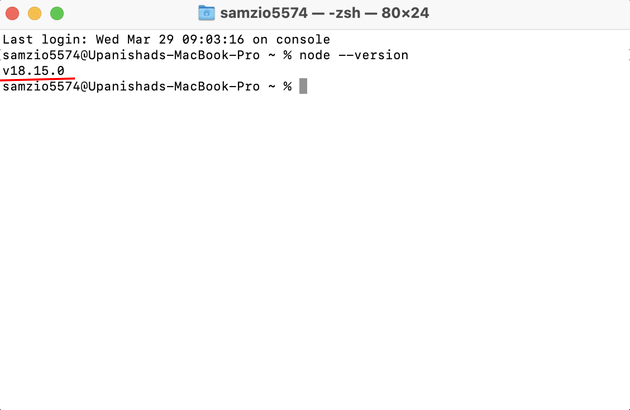

- Open the Terminal and run the following command to check whether Node.js has been installed correctly. If a version number is returned, then Node.js has been successfully installed.

node --version

- Verify the Python version by running the following command, which should report Python 3.10 or an earlier version.

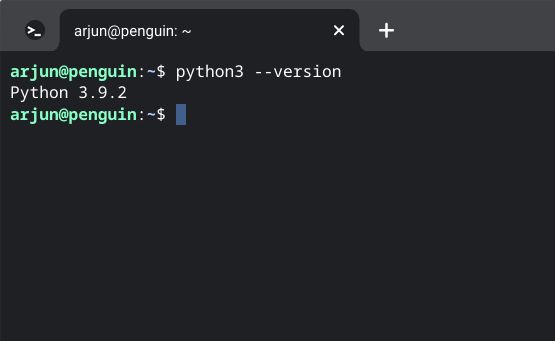

python3 --version

- If there is no output, or if the installed version is newer than 3.10, download Python 3.10 (or an earlier version) from the official website, choosing the “macOS 64-bit universal2 installer” option, and install it on your Mac.

3. Linux and ChromeOS

To run Alpaca and LLaMA models offline on Linux and ChromeOS, it is necessary to set up both Python and Node.js. The following steps outline how to do this:

- Open the Terminal and use the command below to check which version of Python is installed. If it is Python 3.10 or an earlier version, the system is ready to use.

python3 --version

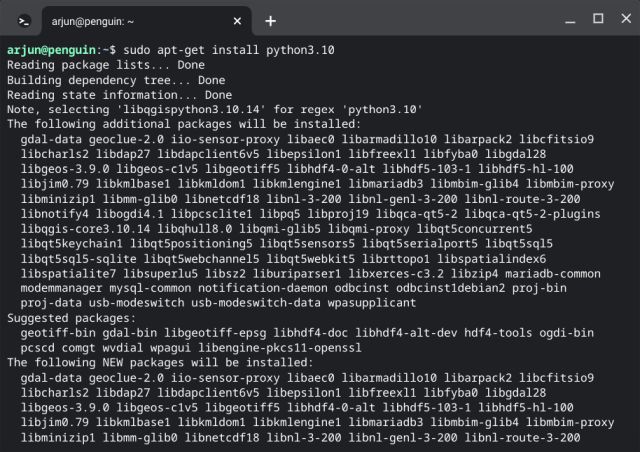

- If the system has a newer version of Python installed, use the commands below to install Python 3.10 on Linux and ChromeOS.

sudo apt install software-properties-common

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt-get update

sudo apt-get install python3.10

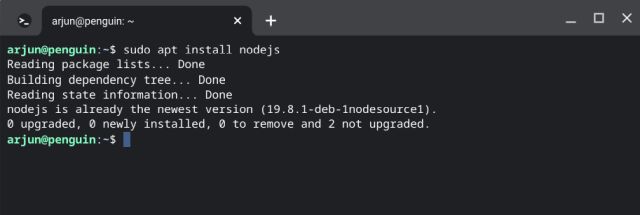

- Install Node.js after Python by executing the command below.

sudo apt install nodejs

- After installing Node.js, use the command below to confirm that the Node.js version is 18.0 or higher.

node --version
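The version requirements above (Python 3.10 or earlier, Node.js 18.0 or higher) can also be checked with a short script. The following is only a sketch: the helper names `parse_version`, `python_ok`, and `node_ok` are made up for this illustration, and it assumes that "3.10 or earlier" means a Python 3 release.

```python
import re
import subprocess
import sys


def parse_version(text):
    """Extract the first dotted version from text like 'v18.16.0' or 'Python 3.10.11'."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    # A missing patch component (e.g. 'Python 3.9') is treated as 0.
    return tuple(int(part) for part in match.groups(default="0"))


def python_ok(version):
    # The article asks for Python 3.10 or earlier (assumed here to mean Python 3.x).
    return version[0] == 3 and version[1] <= 10


def node_ok(version):
    # The article asks for Node.js 18.0 or higher.
    return version[:2] >= (18, 0)


if __name__ == "__main__":
    print("Python OK:", python_ok(sys.version_info[:3]))
    try:
        output = subprocess.run(
            ["node", "--version"], capture_output=True, text=True, check=True
        ).stdout
        print("Node.js OK:", node_ok(parse_version(output)))
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("Node.js was not found or failed to run")
```

Run it with the same interpreter you intend to use for the installation, since it checks the version of the Python that executes it.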

Install Alpaca and LLaMA Models On Your Computer

After installing Python and Node.js, you can install and run a language model similar to ChatGPT on your computer. Before installing, make sure that the Terminal can detect both the python and node commands.

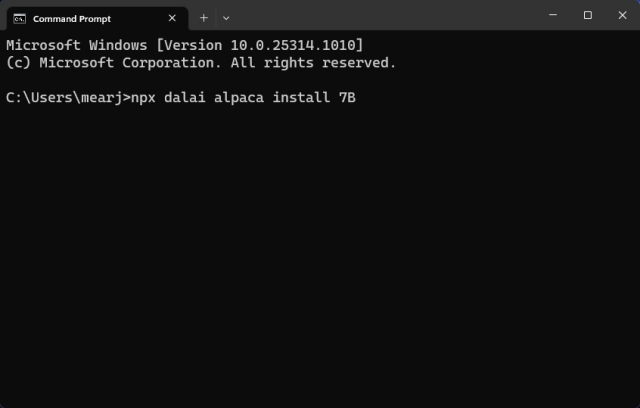
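One way to confirm that the commands are detectable is to look them up on the PATH from Python. This is a minimal sketch; `find_commands` is a hypothetical helper, and the list of names checked (including `python3` and `npx`) is an assumption about what your system exposes.

```python
import shutil


def find_commands(names):
    """Map each command name to its full path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    # "python3" is included because macOS and Linux often expose Python under that name.
    for name, path in find_commands(["python", "python3", "node", "npx"]).items():
        print(f"{name:8} {path or 'NOT FOUND'}")
```

If `node` or `npx` shows as not found, reopening the terminal or restarting the computer after installation often resolves it, since the PATH is read when the terminal starts.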

- Open your terminal and ensure that both Python and Node.js are detected. To install the

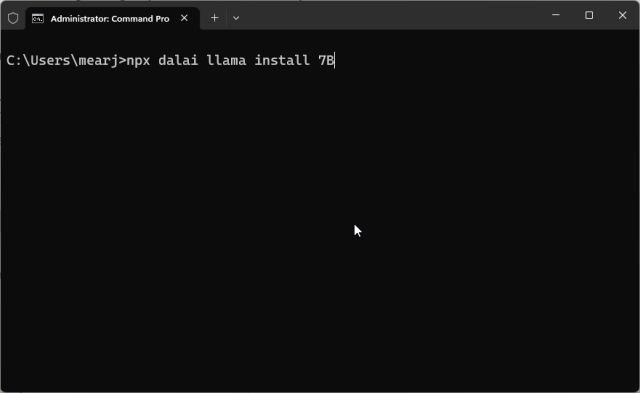

- Alpaca 7B LLM model, enter the following command into your terminal. If you want to install the larger 13B model, replace “7B” with “13B”. Keep in mind that the larger model requires more disk space (up to 8.1GB).

npx dalai alpaca install 7B

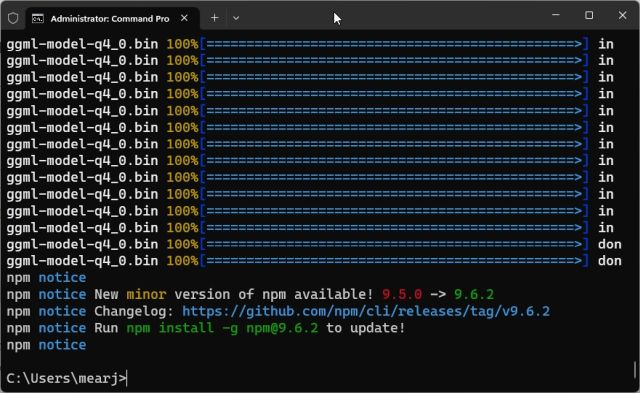

- After entering the command, type “y” and press Enter to start the installation process. This can take 20 to 30 minutes, depending on your internet speed and the model size.

- Once the installation is complete, you should see a confirmation message in your terminal.

- You can now install the LLaMA models as well, or skip to the next step and test the Alpaca model right away. Keep in mind that the LLaMA models are significantly larger, with the 7B model requiring up to 31GB of storage space. To install it, execute the command below; for a larger variant, replace “7B” with “13B”, “30B”, or “65B”. The largest model may take up to 432GB of space.

npx dalai llama install 7B

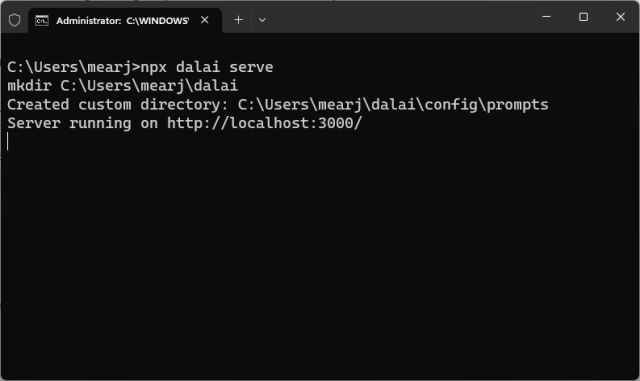
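Because the models are large, it can be worth checking free disk space before starting a download. The sketch below uses only the size figures quoted in this article (sizes for other variants are not listed here, so they are left out); `has_room` is a hypothetical helper, and checking the home directory's drive is an assumption about where the models are stored.

```python
import shutil
from pathlib import Path

# Approximate upper-bound sizes in GB, taken only from the figures quoted in this article.
MODEL_SIZE_GB = {
    ("alpaca", "13B"): 8.1,
    ("llama", "7B"): 31,
    ("llama", "65B"): 432,
}


def has_room(required_gb, free_bytes):
    """Return True if free_bytes covers required_gb (using 1 GB = 10**9 bytes)."""
    return free_bytes >= required_gb * 10**9


if __name__ == "__main__":
    # Assumption: models are stored on the same drive as the home directory.
    free = shutil.disk_usage(Path.home()).free
    print(f"Free space: {free / 10**9:.1f} GB")
    for (family, size), gb in MODEL_SIZE_GB.items():
        verdict = "fits" if has_room(gb, free) else "does NOT fit"
        print(f"{family} {size}: needs up to {gb} GB, {verdict}")
```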

- After the installation is complete, start the web server by entering the following command into your terminal:

npx dalai serve

- Open a web browser on your computer and navigate to the specified address. Here, you can test the Alpaca and LLaMA models locally without an internet connection.

http://localhost:3000

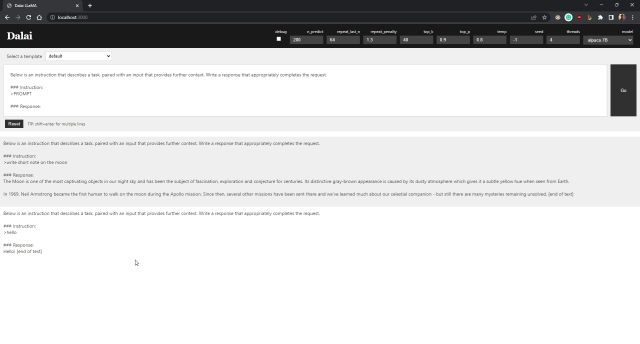

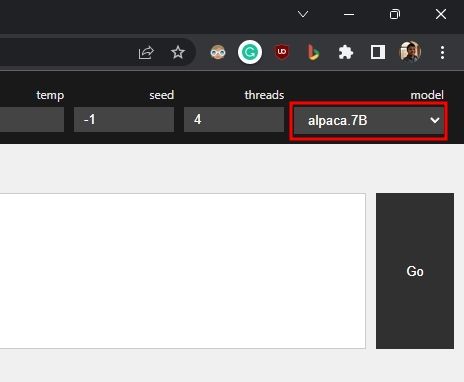
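If the page does not load, a quick way to tell whether the server is running at all is to try a TCP connection to the port. This is a minimal sketch that assumes the server listens on `localhost` port 3000, as in the address above; `is_listening` is a hypothetical helper and only confirms that something accepts connections, not that it is the dalai server.

```python
import socket


def is_listening(host="localhost", port=3000, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    if is_listening():
        print("Something is listening on http://localhost:3000")
    else:
        print("Nothing is listening on port 3000; is `npx dalai serve` still running?")
```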

- In the top-right corner, select the desired model from the “model” drop-down menu, which includes the options “Alpaca 7B” and “LLaMA 7B”.

- Once the setup is complete, you can use this language model on your personal computer even without an internet connection. Simply replace “PROMPT” with your query and click “Go” to begin.

- While running the local Alpaca LLM server, you can monitor the resource usage through your terminal.

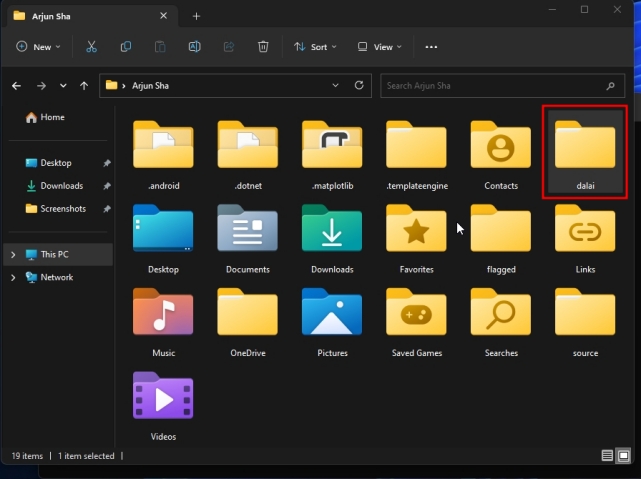

- To free up disk space by deleting the downloaded models, navigate to your user profile directory and delete the “dalai” folder which contains all the files, including the models.
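The cleanup step can also be scripted. The sketch below assumes, as described above, that the models live in a “dalai” folder directly under your user profile (home) directory; `folder_size_bytes` and `remove_models` are hypothetical helpers. It defaults to a dry run, so nothing is deleted unless you change that flag.

```python
import shutil
from pathlib import Path


def folder_size_bytes(folder):
    """Sum the sizes of all regular files under folder."""
    return sum(p.stat().st_size for p in folder.rglob("*") if p.is_file())


def remove_models(folder, dry_run=True):
    """Return the folder's size in bytes; delete the folder unless dry_run is True."""
    if not folder.is_dir():
        return 0
    size = folder_size_bytes(folder)
    if not dry_run:
        shutil.rmtree(folder)
    return size


if __name__ == "__main__":
    # Assumption: the models are in a "dalai" folder directly under the home directory.
    target = Path.home() / "dalai"
    freed = remove_models(target, dry_run=True)  # change to False to actually delete
    print(f"{target}: {freed / 10**9:.2f} GB would be freed")
```

Deleting the folder is permanent, so it is worth reviewing the reported path and size from the dry run first.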

Run a ChatGPT-like Language Model Privately and Completely Offline

By following the given instructions, one can operate a ChatGPT-like language model privately and entirely offline, achieving satisfactory outcomes. In the future, more advanced and powerful language models may become accessible, which can be executed on devices ranging from smartphones to small-board computers such as Raspberry Pi.